© Hong Kong Academy of Medicine. CC BY-NC-ND 4.0

MEDICAL PRACTICE

High-fidelity simulation training programme for final-year medical students: implications from the perceived learning outcomes

YF Choi, FHKCEM, MSc(Clinical Education)(Edin)1,2,3;

TW Wong, FHKCEM, FHKAM (Emergency Medicine)1

1 Accident and Emergency Department, Pamela Youde Nethersole Eastern Hospital, Chai Wan, Hong Kong

2 Programme Director, Nethersole Clinical Simulation Training Centre, Hong Kong

3 Medical Director, Hong Kong Fire Services Department, Hong Kong

Corresponding author: Dr YF Choi (choiyf@gmail.com)

Abstract

We designed a high-fidelity simulation training session for final-year medical students in their emergency medicine specialty clerkship. This new initiative had clearly defined learning outcomes, and we aimed to evaluate them. At the end of the session, students completed an evaluation form focusing on their perceived learning outcomes, and the responses were analysed by thematic analysis. We collected responses from 149 students. In addition to the intended outcomes of the course, students reported unexpected learning outcomes, some of which matched gaps described in the literature between undergraduate medical education and the transition to early clinical practice. High-fidelity simulation training in medical school could be an effective tool to address some of these gaps in the transition between undergraduate medical education and postgraduate practice.

Introduction

Clinical simulation training has become more widely practised in local hospitals and academic healthcare institutions over the past decade, with a wide range of training modalities, from part-task simulators to full-body manikins and from single-skill training to interprofessional team-based training.

The Nethersole Clinical Simulation Training Centre is a hospital-based training centre in Pamela Youde Nethersole Eastern Hospital that has advocated high-fidelity multidisciplinary team training for hospital staff since its establishment in 2012. Since 2008, the emergency department of the hospital has been one of the teaching centres for medical students from one of the medical schools in Hong Kong during their emergency medicine specialty clerkship. In 2017, the emergency department collaborated with the Nethersole Clinical Simulation Training Centre in an initiative to design a high-fidelity simulation training session for medical students.

In the past, medical students in local medical schools received little high-fidelity simulation training because the schools lacked high-fidelity training facilities. Furthermore, it was conventionally believed that high-fidelity simulation training was more beneficial for expert-level learners and that low-fidelity training was more suitable for beginners, because the sophisticated context of the high-fidelity environment might jeopardise learning objectives by creating excessive cognitive burden.1 2 3 Owing to their limited clinical exposure, medical students were regarded as beginners.

However, a recent study suggested that once learners have acquired some basic skills, high-fidelity simulation training might benefit them through psychological immersion, despite the extra cognitive burden.4 We therefore decided to trial high-fidelity simulation training for final-year medical students, who have already completed most of their academic studies and acquired some basic practical skills.

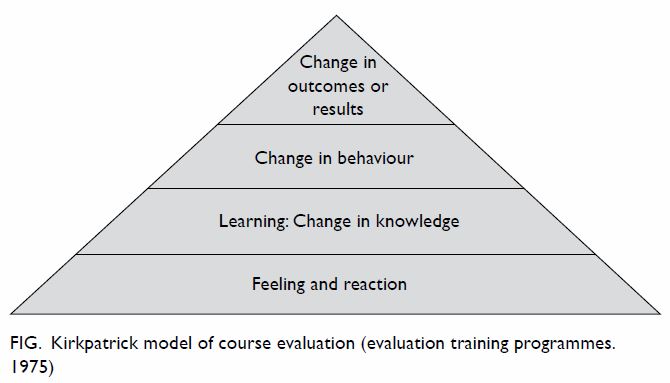

The prototype of the simulation training course started in 2017, when we mainly evaluated the learners’ “reaction”, the most basic level of outcome evaluation in Kirkpatrick’s model (Fig). Student feedback was very positive and the course was well received.5 Encouraged by this, we revised the course material in 2018 to include written intended learning outcomes. In the present study, we evaluate “learning”, the next level up in Kirkpatrick’s model.

The aim of the present study was to evaluate the learning outcomes of a high-fidelity simulation training course for final-year medical students.

Methods

Design

This was a qualitative study using a survey conducted in English. The report was checked against the SRQR reporting guideline (Standards for Reporting Qualitative Research, 2016 version).

Setting

The training course was conducted in the Nethersole Clinical Simulation Training Centre, which has a simulation training suite with separate simulation rooms and a debriefing room. The simulation rooms are equipped with ceiling-mounted cameras for video-assisted debriefing. The simulation room used was set up to resemble an emergency department resuscitation room and contained a full-body high-fidelity manikin.

Course design

The training course consisted of a brief introduction followed by four short case scenarios in a 3-hour session. In the introduction, students were briefed on the course structure, learning objectives, and clinical simulation rules, and took part in a short familiarisation session in the simulation laboratory. Each group of seven to eight students was divided into two subgroups, which took turns to run the four scenarios. While one subgroup performed a scenario, the other observed from the debriefing room via the audio-visual system. The instructor wore a nurse uniform and acted as an experienced nurse in the scenarios but provided no real-time coaching. In all four scenarios, the patients presented with acute or even life-threatening conditions requiring prompt treatment. This design was intended to provide high psychological fidelity, mirroring real clinical practice. Participants had to treat the patients on their own with no guidance from the instructor. A debriefing session was held immediately after each scenario.

Data collection

A cohort of student participants in 2018 was selected by convenience sampling and asked to complete an evaluation form immediately after the session. The form invited free-text responses only, with no rating scale. Students could write up to three perceived learning outcomes, up to three reflections on the course, and any further comments or suggestions about the course. The form had no space for the student’s name, so data collection was completely anonymous.

Data analysis

The findings in the evaluation form were processed

through thematic analysis shortly after each course and compared with the

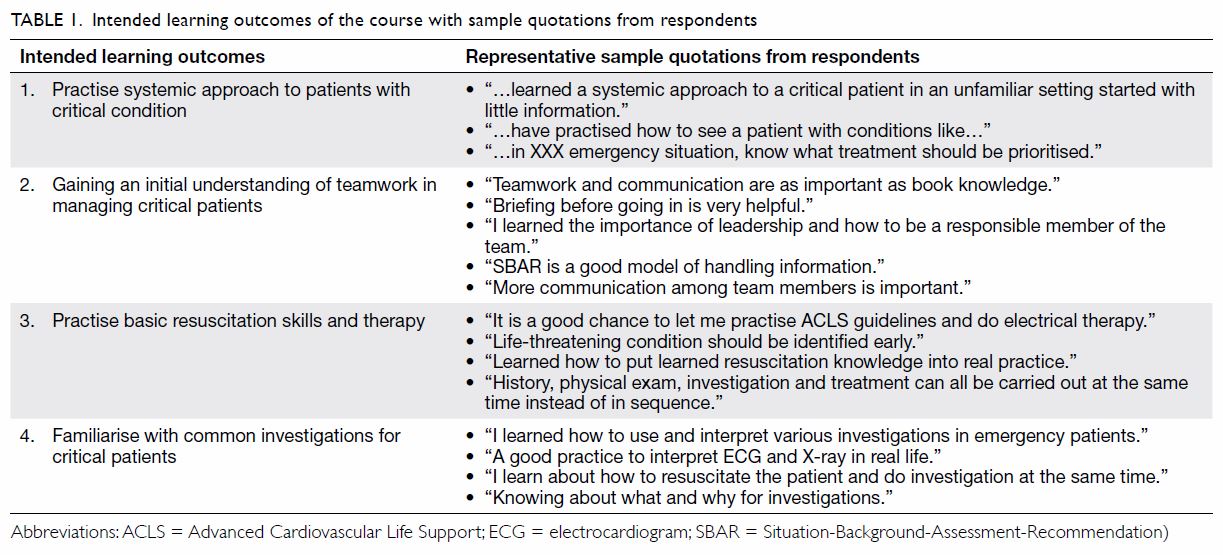

intended learning outcomes of the course (Table 1). The intended learning outcomes were

designed to include both clinical learning outcomes and teamwork learning

outcomes such as briefing, debriefing, communication, situation awareness,

and leadership. The clinical learning outcomes, owing to their diversity

were further divided into three separate categories (general approach to

critical patients, use of investigations and resuscitation skills) while

all the teamwork-related learning outcomes are grouped under one category.

The written learning outcomes were first coded under these intended

learning outcomes. Items not fitted under the intended learning outcomes

were considered new findings. These new findings were further processed

newly identified themes. The results of thematic analysis were checked

serially by the authors for quality assurance. Consensus on new themes

after further literature review was made between authors on items that

could not be categorised under the intended learning outcomes.

Results

Data saturation, defined as the point at which thematic analysis of the responses from several further courses yields no new theme, was reached after 20 sessions, by which time 149 evaluation forms had been collected. The response rate was 100%, and 148 of the 149 returned forms (99.3%) were complete, despite the voluntary nature of data collection.
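The saturation rule described above can be written as a simple stopping check. The Python sketch below is illustrative only and is not the authors’ procedure: it assumes that the themes in each session’s forms have already been coded by hand, and the name `saturation_session` and the parameter `window` (the number of consecutive sessions contributing no new theme) are hypothetical, since the study specifies only “several courses”.

```python
from typing import Iterable, Optional, Set


def saturation_session(themes_per_session: Iterable[Set[str]], window: int = 3) -> Optional[int]:
    """Return the 1-based session index at which `window` consecutive sessions
    have contributed no new theme, or None if that never happens.

    `themes_per_session` holds hand-coded themes for each session, in the
    order the sessions were run. `window` is an assumed parameter: the study
    only says saturation was judged after "several courses".
    """
    seen: Set[str] = set()       # every theme identified so far
    sessions_without_new = 0     # length of the current run with no new theme
    for index, themes in enumerate(themes_per_session, start=1):
        new_themes = set(themes) - seen
        seen |= new_themes
        if new_themes:
            # A new theme appeared, so the run of "no new theme" sessions restarts.
            sessions_without_new = 0
        else:
            sessions_without_new += 1
            if sessions_without_new == window:
                return index
    return None
```

Resetting the counter whenever a new theme appears means saturation requires an unbroken run of `window` sessions without new themes, which is the stricter reading of the definition.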

The intended learning outcomes are listed in Table 1. The responses on the evaluation forms indicated that all of the intended learning outcomes were well received by the respondents (Table 1).

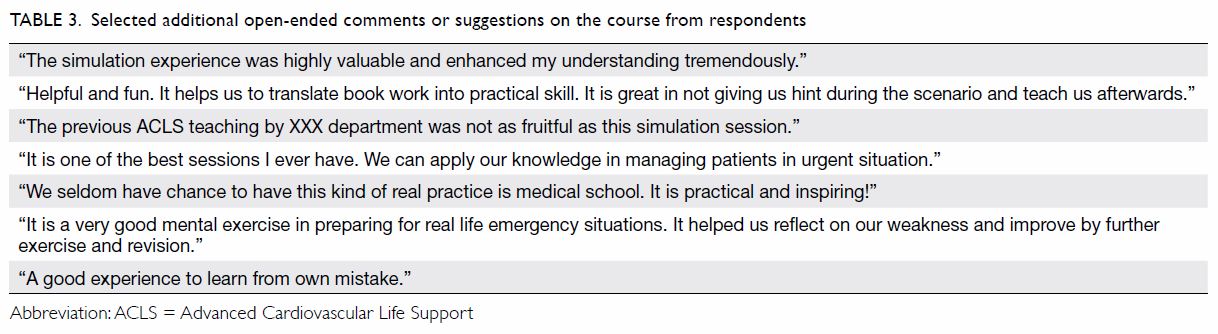

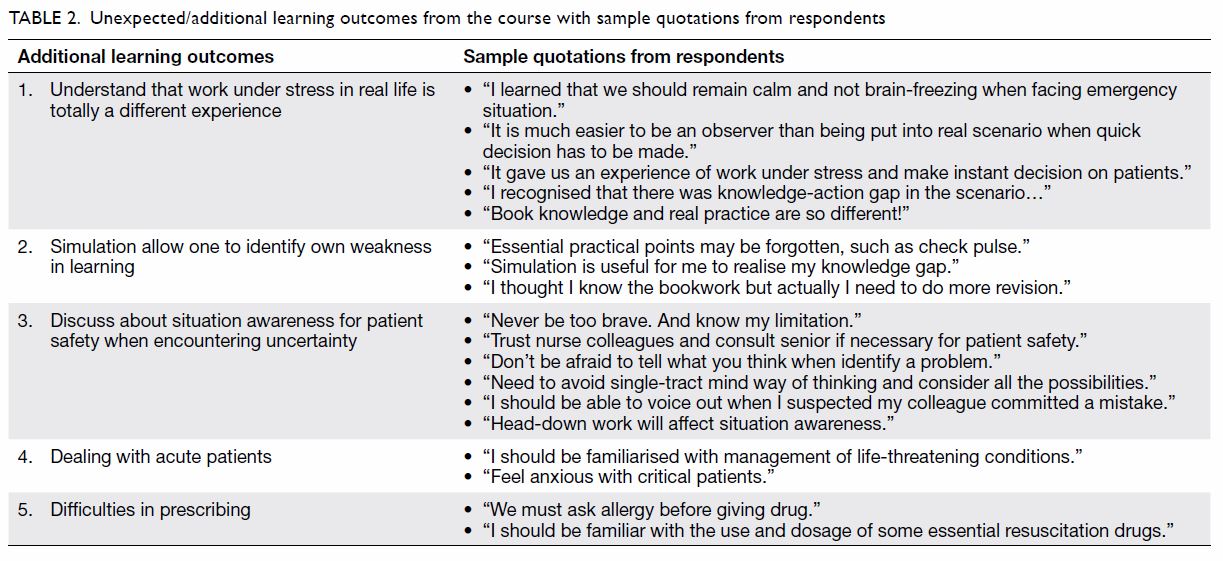

In addition to the intended learning outcomes, we found a large number of learning points or reflections that could not be categorised under our pre-planned learning objectives. These unexpected learning points were grouped by thematic analysis under new headings, as listed in Table 2.

Table 2. Unexpected/additional learning outcomes from the course with sample quotations from respondents

Respondents were also given the opportunity to make open comments or suggestions about the course. Some of the responses are listed in Table 3.

Discussion

The course evaluation questionnaire was originally intended to evaluate the learning objectives as perceived by students. The results suggest that our intended learning outcomes were well received by our students. Thematic analysis also revealed a number of learning outcomes that did not fall within our intended learning outcomes. A further literature review showed that these unexpected outcomes matched some of the gaps identified in the transition between undergraduate medical education and postgraduate practice.

Fidelity

In our training course, high fidelity was thought to be an important element in achieving the learning outcomes, including the unexpected ones. Fidelity is commonly defined as “the level of realism present to the learners during a simulation training”.3 4 Conventionally, higher fidelity is believed to lead to more efficient learning.6

However, fidelity is not a one-dimensional concept, and the literature describes its components using different terminology. For simplicity, we adopt the definition described by Feinstein and Cannon, in which the fidelity of a simulation has physical and functional aspects.3 Physical fidelity includes the environmental, visual, and spatial components, such as the design of the simulation room, the performance of the manikin, and the settings of various instruments. Functional fidelity is the dynamic interaction between the participants and their task, including information, stimuli, and the learners’ responses. Other simulation educators have used the terms “conceptual”, “experiential”, “emotional” or, more commonly, “psychological” fidelity to describe the same or similar concepts,2 7 but further discussion is beyond the scope of the present study.

High-fidelity simulation training has not always been regarded as superior to lower-fidelity training. Besides the issue of cost, studies in various disciplines, including early work in civil and military aviation, failed to show better learning outcomes after high physical fidelity training.3 8 9 10 This might be because high physical fidelity training over-stimulated novice learners, causing cognitive overload that jeopardised the intended learning objectives.11 Such findings supported the conventional belief that low fidelity is better for beginners and high fidelity for expert learners. Medical students, who have little or no working experience, are regarded as novice learners. These conventional beliefs lead to the conclusion that exposing medical students to high physical fidelity simulation training is unreasonable and not cost-effective. However, this overlooks the importance of functional or psychological fidelity. In recent years, studies have suggested that functional or psychological fidelity is important in enhancing learning.7 12 13 14 15

In addition to simulating real-world functioning, high psychological fidelity also creates a stressful environment that raises students’ arousal level and facilitates learning and performance. Therefore, in addition to the relatively high physical fidelity provided by the well-equipped simulation training room and sophisticated manikin, we further enhanced functional or psychological fidelity in our training course through the following interventions:

All of these measures enhanced functional fidelity by providing a realistic working environment and promoting psychological immersion among the participants.

A further point about the term “fidelity” is that physical and psychological fidelity are neither mutually exclusive nor competing, but complementary.17 18 19 20 For example, a high-fidelity manikin that can talk or moan can enhance the psychological stimuli experienced by participants.

Some of the learning outcomes of the course are closely related to psychological fidelity, namely teamwork, working under stress, identifying one’s own weakness, acute patient management, and situation awareness. The written responses under these headings reflected interaction, information flow, stress, and self-reflection.

Gaps in undergraduate medical education

Although this was not an original objective of the study, we found that the unexpected learning outcomes echoed some of the gaps identified in the literature in the transition from undergraduate medical education to early postgraduate practice. There have been calls worldwide in the medical education literature for reform of undergraduate medical curricula to address gaps in undergraduate education that adversely affect medical interns in their early practice.21 22 23 24 25 These gaps include working under stress, working on call, uncertainty, helplessness, workload, difficulties in prescribing medication, and managing acutely ill patients; similar gaps have been identified in medical education programmes across the world.

Newly graduated interns perceive their work to be stressful and feel underprepared.23 24 25 Educators have suggested that undergraduate curricula should prepare medical students to deal with expected stress, such as facing uncertainty, knowing one’s limitations, and asserting one’s right to support.21 26 27 The same wording and gaps appeared in the perceived learning outcomes of our course. In the literature, interns have indicated that they could not anticipate the shortcomings of their undergraduate education until they were in clinical practice. It has also been suggested that undergraduate medical education should place more emphasis on communication skills and emotional involvement.21 28 29 Again, these aspects match the perceived learning outcomes of our course.

More work-related training should be provided in the final year of medical school, particularly in managing acutely ill patients and prescribing medication.30 31 32 Studies have shown that, despite curriculum reform, the management of acute problems remains an unresolved gap.30 33 34 Work-related training with acutely ill patients is difficult to achieve because such patients are not always readily available, even when medical students complete a period of assistant internship. This is a likely reason why curriculum reform and final-year workplace placements have closed some gaps but not others.20 21 Students have limited opportunities to experience real acute care, making it difficult to build expertise through repetitive practice.35 36 Furthermore, there is always the ethical question of whether students should be allowed to treat acutely ill patients.37

The learning outcomes of our study suggest that high-fidelity simulation training can help to address the gaps identified. High-fidelity simulation can generate many scenarios involving acutely ill patients within a short period, creating an environment in which students can make mistakes and learn from them without harming real patients. This idea is supported by the literature, which indicates that simulation training outperforms other instructional methods, such as didactic teaching or problem-based learning, and should serve as an adjunct to them.2 38 39 This point is particularly important because interns or junior doctors are the first medical responders called to attend acutely deteriorating patients on a ward, and suboptimal management on their part might put patients at risk or delay appropriate treatment.21 29 The General Medical Council of the United Kingdom also recommends the use of simulation technology in medical school.22

This study has several limitations: data were collected from only one medical school; the current curriculum was not discussed; the cohort included students at different points in their final year; and most, but not all, students were recruited before formal clinical placement.

Summary

There are gaps between undergraduate medical education and the transition to postgraduate clinical practice that could be addressed through reform of undergraduate medical curricula. Psychologically immersive high-fidelity simulation training for medical students is likely to be a helpful strategy to enhance their preparedness. Such training should emphasise the management of acutely ill patients.

Author contributions

All authors had full access to the data, contributed to the study, approved the final version for publication, and take responsibility for its accuracy and integrity. YF Choi wrote the article. All authors contributed to the concept of the study, acquisition and analysis of data, and critical revision for important intellectual content.

Conflicts of interest

As an editor of the journal, TW Wong was not involved in the peer review process. The other author has disclosed no conflicts of interest.

Funding/support

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethics approval

No real patients were involved in this study and no personal data were collected from the participants. Verbal consent was obtained from all participants in the introduction session of the course; participation was non-compulsory. The study was approved by the hospital ethics committee (Ref HKECREC-2019-013).

References

1. Alessi SM. Fidelity in the design of instructional simulations. J Comput Based Instr 1988;14:40-7.

2. Maran NJ, Glavin RJ. Low- to high-fidelity simulation—a continuum of medical education? Med Educ 2003;37(Suppl 1):22-8.

3. Feinstein AH, Cannon HM. Fidelity, verifiability, and validity of simulation: constructs for evaluation. Developments in Business Simulation and Experiential Learning 2001;28:57-65.

4. Mills BW, Carter OB, Rudd CJ, Claxton LA, Ross NP, Strobel NA. Effects of low- versus high-fidelity simulations on the cognitive burden and performance of entry-level paramedicine students: a mixed-methods comparison trial using eye-tracking, continuous heart rate, difficulty rating scales, video observation and interviews. Simul Healthc 2016;11:10-8.

5. Choi YF, Wong TW. High fidelity simulation training for medical students in emergency medicine specialty clerkship. Proceedings of the 9th Asia Medical Education Symposium cum Frontiers in Medical and Healthcare Science Education 2017. OP2.

6. Hays RT, Singer MJ. Simulation Fidelity in Training System Design: Bridging the Gap between Reality and Training. New York: Springer-Verlag; 1989.

7. Diederich E, Mahnken JD, Rigler SK, Williamson TL, Tarver S, Sharpe MR. The effect of model fidelity on learning outcomes of a simulation-based education program for central venous catheter insertion. Simul Healthc 2015;10:360-7.

8. Matsumoto ED, Hamstra SJ, Radomski SC, Cusimano MD. The effect of bench model fidelity on endourological skill: a randomized controlled trial. J Urol 2002;167:1243-7.

9. Chandra DB, Savoldelli GL, Joo HS, Weiss ID, Naik VN. Fiberoptic oral intubation: the effect of model fidelity on training for transfer to patient care. Anesthesiology 2008;109:1007-13.

10. Grober ED, Hamstra SJ, Wanzel KR, et al. The educational impact of bench model fidelity on the acquisition of technical skill: the use of clinically relevant outcome measures. Ann Surg 2004;240:374-81.

11. Martin EL, Waag WL. Contributions of platform motion to simulator training effectiveness: Study I—basic contact. 39. Brooks Air Force Base, Texas: Air Force Human Resources Laboratory; 1978.

12. Bland AJ, Topping A, Tobbell J. Time to unravel the conceptual confusion of authenticity and fidelity and their contribution to learning within simulation-based nursing education. A discussion paper. Nurse Educ Today 2014;34:1112-8.

13. Chetwood JD, Garg P, Burton K. High-fidelity realistic acute medical simulation and SBAR training at a tertiary hospital in Blantyre, Malawi. Simul Healthc 2018;13:139-45.

14. Rudolph JW, Simon R, Raemer DB. Which reality matters? Questions on the path to high engagement in healthcare simulation. Simul Healthc 2007;2:161-3.

15. Hamstra SJ, Brydges R, Hatala R, Zendejas B, Cook DA. Reconsidering fidelity in simulation-based training. Acad Med 2014;89:387-92.

16. Rudolph JW, Raemer DB, Simon R. Establishing a safe container for learning in simulation: the role of the presimulation briefing. Simul Healthc 2014;9:339-49.

17. Kozlowski SW, DeShon RP. A psychological fidelity approach to simulation-based training: theory, research and principles. In: Elliott LR, Coovert MD, Schiflett SG, editors. Scaled Worlds: Development, Validation and Applications. New York: Ashgate Publishing; 2017.

18. Oser RL, Cannon-Bowers J, Sala E, Dwyer DJ. Enhancing human performance in technology-rich environments: guidelines for scenario-based training. Hum Technol Interact Complex Syst 1999;9:175-202.

19. Beaubien JM, Baker DP. The use of simulation for training teamwork skill in health care: how low can we go? Qual Saf Health Care 2004;13 Suppl 1:i51-6.

20. Tun JK, Alinier G, Tang J, Kneebone RL. Redefining simulation fidelity in healthcare education. Simul Gaming 2015;46:159-74.

21. Brennan N, Corrigan O, Allard J, et al. The transition from medical student to junior doctor: today’s experiences of tomorrow’s doctors. Med Educ 2010;44:449-58.

22. General Medical Council. Tomorrow’s Doctors. London: GMC; 2009.

23. Teo A. The current state of medical education in Japan: a system under reform. Med Educ 2007;41:302-8.

24. Segouin C, Jouquan J, Hodges B, et al. Country report: medical education in France. Med Educ 2007;41:295-301.

25. Ochsmann EB, Zier U, Drexler H, Schmid K. Well prepared for work? Junior doctors’ self-assessment after medical education. BMC Med Educ 2011;11:99.

26. Miles S, Kellett J, Leinster SJ. Medical graduates’ preparedness to practice: a comparison of undergraduate medical school training. BMC Med Educ 2017;17:33.

27. Lempp H, Cochrane M, Seabrook M, Rees J. Impact of educational preparation on medical students in transition from final year to PRHO year: a qualitative evaluation of final-year training following the introduction of new year 5 curriculum in a London medical school. Med Teach 2004;26:276-8.

28. Watmough S, Garden A, Taylor D. Pre-registration house officers’ views on studying under a reformed medical curriculum in the UK. Med Educ 2006;40:893-9.

29. O’Neill PA, Jones A, Willis SC, McArdle PJ. Does a new undergraduate curriculum based on Tomorrow’s Doctors prepare house officers better for their first post? A qualitative study of the views of pre-registration house officers using critical incidents. Med Educ 2003;37:1100-8.

30. Illing JC, Peile E, Morrison J, et al. How prepared are medical graduates to begin practice? A comparison of three diverse UK medical schools. London: General Medical Council; 2008.

31. Smith CM, Perkins GD, Bullock I, Bion JF. Undergraduate training in the care of the acutely ill patient: a literature review. Intensive Care Med 2007;33:901-7.

32. Jensen ML, Hesselfeldt R, Rasmussen MB, et al. Newly graduated doctors’ competence in managing cardiopulmonary arrests assessed using a standardized Advanced Life Support (ALS) assessment. Resuscitation 2008;77:63-8.

33. Bosch J, Maaz A, Hitzblech T, Holzhausen Y, Peters H. Medical students’ preparedness for professional activities in early clerkship. BMC Med Educ 2017;17:140.

34. Illing JC, Morrow GM, Rothwell nee Kergon CR, et al. Perception of UK medical graduates’ preparedness for practice: a multi-centre qualitative study reflecting the importance of learning on the job. BMC Med Educ 2013;13:34.

35. Phillips PS, Nolan JP. Training in basic and advanced life support in UK medical schools: questionnaire survey. BMJ 2001;323:22-3.

36. Vozenilek J, Huff JS, Reznek M, Gordon JA. See one, do one, teach one: advanced technology in medical education. Acad Emerg Med 2004;11:1149-54.

37. Ziv A, Wolpe PR, Small SD, Glick S. Simulation-based medical education: an ethical imperative. Acad Med 2003;78:783-8.

38. Beal MD, Kinnear J, Anderson CR, Martin TD, Wamboldt R, Hooper L. The effectiveness of medical simulation in teaching medical students critical care medicine: a systematic review and meta-analysis. Simul Healthc 2017;12:104-16.

39. McCoy CE, Menchine M, Anderson C, Kollen R, Langdorf MI, Lotfipour S. Prospective randomized crossover study of simulation vs didactics for teaching medical students the assessment and management of critically ill patients. J Emerg Med 2011;40:448-55.